Manifold

Get ready for Gabriel to completely butcher a term that already has its own definition, and this time apply it to so many different applications that it's clearly not okay to apply it to.

What Is a Manifold? (Foundations)

Definition

THE BIG COMPLEX CURVE!

A manifold, in mathematical terms, is a ~Topological~ concept in which every point of the big complex curve (or surface, or whatever the fuck you want to call it) is given its own unique spot on the curve. Many things are manifolds. There's the reality manifold and so much more.

[!PDF|] Deep Learning with Python, p.155

Remember the crumpled paper ball metaphor from chapter 2? A sheet of paper represents a 2D manifold within 3D space (see figure 5.9). A deep learning model is a tool for uncrumpling paper balls, that is, for disentangling latent manifolds.

A brief talk about these two passages.

A manifold is a topological space that "locally resembles" Euclidean space: zoom in far enough around any point and it looks flat.

So that means it has a ton of properties that make it useful to study!
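A rough sketch of what "locally resembles" means, using a unit circle as a stand-in 1D manifold sitting in 2D space. The function name `arc_to_chord_ratio` is made up for this note. The idea: the smaller the piece of the circle you look at, the closer the curved arc gets to a plain straight segment.

```python
import math

def arc_to_chord_ratio(theta: float) -> float:
    """Ratio of curved distance to straight-line distance between two
    points on a unit circle that are `theta` radians apart."""
    arc = theta                            # arc length on a unit circle
    chord = 2.0 * math.sin(theta / 2.0)    # straight segment between the endpoints
    return arc / chord

# Zooming in: the ratio approaches 1, i.e. the circle looks like a flat line.
for theta in (math.pi, 1.0, 0.1, 0.01):
    print(f"theta={theta:.2f}  arc/chord={arc_to_chord_ratio(theta):.6f}")
```

Half a circle is clearly not a straight line (the ratio is well above 1), but a tiny arc is nearly indistinguishable from one.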

And of course, if we were to drag Gödel, Escher, Bach into this, yes, there are quite a few paradoxes, especially considering the Banach-Tarski paradox.

But so many things are manifolds, and if we start to treat them as integral to how the world works, we can show just how powerful math is for figuring these things out.

The Manifold Hypothesis (Application)

What is the Manifold Hypothesis

[!PDF|] Deep Learning with Python, p.154

The manifold hypothesis implies that

Machine learning models only have to fit relatively simple, low-dimensional, highly structured subspaces within their potential input space (latent manifolds).

Within one of these manifolds, it's always possible to interpolate between two inputs, that is to say, morph one into another via a continuous path along which all points fall on the manifold.
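A toy illustration of the interpolation point, assuming the "data manifold" is just a unit circle in 2D input space and the latent coordinate is the angle. All the helper names here are hypothetical. Interpolating the latent coordinate keeps every intermediate point on the manifold, while naively averaging the raw inputs cuts through the inside of the circle.

```python
import math

def on_circle(angle: float) -> tuple[float, float]:
    """Map a latent coordinate (angle) to a point on the unit circle."""
    return (math.cos(angle), math.sin(angle))

def norm(p: tuple[float, float]) -> float:
    """Distance from the origin; equals 1 for points on the circle."""
    return math.hypot(p[0], p[1])

def interpolate_on_manifold(a0: float, a1: float, t: float) -> tuple[float, float]:
    """Interpolate the latent angle, then map back onto the circle."""
    return on_circle((1 - t) * a0 + t * a1)

def interpolate_in_input_space(a0: float, a1: float, t: float) -> tuple[float, float]:
    """Interpolate the raw (x, y) coordinates directly."""
    p0, p1 = on_circle(a0), on_circle(a1)
    return ((1 - t) * p0[0] + t * p1[0], (1 - t) * p0[1] + t * p1[1])

a0, a1 = 0.0, math.pi / 2
mid_manifold = interpolate_on_manifold(a0, a1, 0.5)
mid_linear = interpolate_in_input_space(a0, a1, 0.5)

print("on-manifold midpoint radius:", norm(mid_manifold))  # stays on the circle
print("input-space midpoint radius:", norm(mid_linear))    # falls inside the circle
```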

[!PDF|] Deep Learning with Python, p.156

Your data forms a highly structured, low-dimensional manifold within the input space—that's the manifold hypothesis. And because fitting your model curve to this data happens gradually and smoothly over time as gradient descent progresses, there will be an intermediate point during training at which the model roughly approximates the natural manifold of the data, as you can see in figure 5.10

In other words, we learn to do those transformations, and similarly enough, yeah, it will be possible to make all of this make sense. If we consider the way we interpret the world as a manifold, as a matrix that we take the dot product of against our senses, then yeah, we'll get pretty close. But does that mean we will never truly understand the patterns? Or will we? We are training our machines on exactly that, on our Schema, and yet does that mean we will never get it completely right?

It's Achilles and the Tortoise from Gödel, Escher, Bach all over again. We'll never catch up, our machines will never catch up... Or at least, maybe some enlightened one, some Buddha, will figure all this out, and any machine learning algorithm it creates will be at just about the same level as me lol. The student never surpasses the teacher.

However! Interestingly enough, from our loins there is still the possibility of offspring gaining even more knowledge, so I guess we can think of generations as epochs, but it's our learning material that needs to grow. Maybe it's the Formal Systems we need to outgrow?!

Machine Learning Applications

[!see-also] Machine Learning and Manifolds in DLIP

~By the Manifold Hypothesis~, the data can be unfolded to the point where you can classify it and understand it in some other way.

In machine learning, it should be painfully obvious how well these ideas translate. I mean, if a wide enough net can quickly approximate a function by lifting, then, I dunno, how would it get through?

[!PDF|] Deep Learning with Python, p.488

the space of all useful programs may look a lot like a continuous manifold.

[!PDF|] Deep Learning with Python, p.155

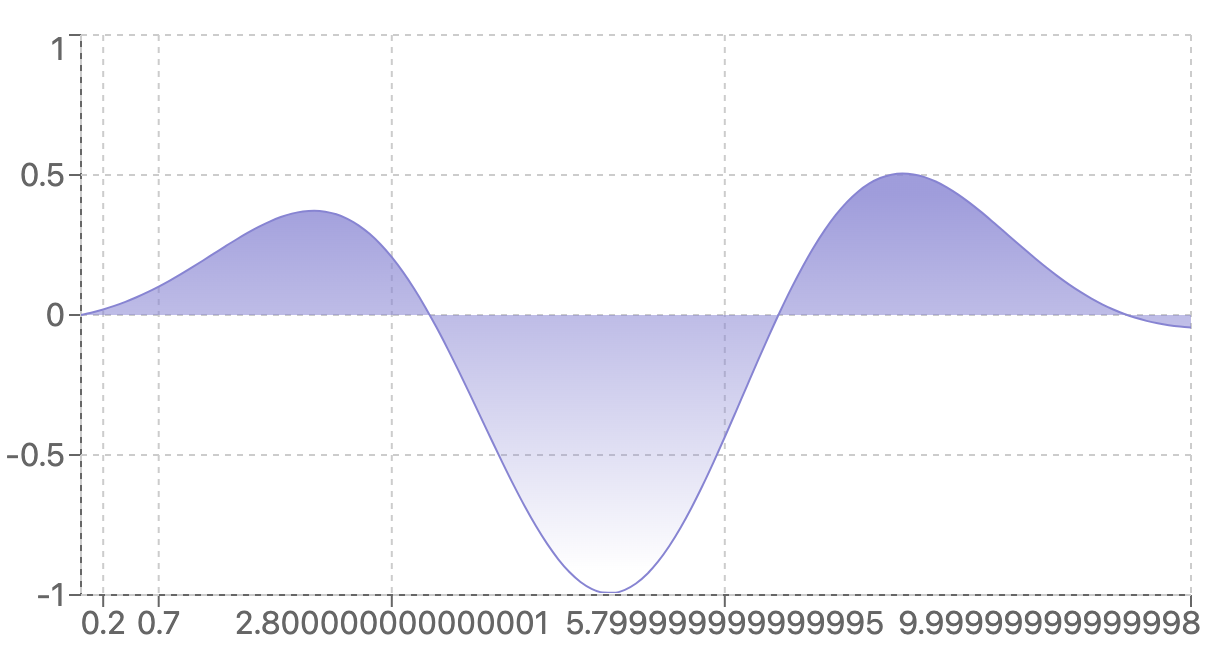

By its very nature, deep learning is about taking a big, complex curve—a manifold—and incrementally adjusting its parameters until it fits some training data points
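A minimal sketch of "incrementally adjusting its parameters until it fits some training data points". This is not a deep network: the "curve" is just a line `w * x + b`, the data is made up (points on `y = 2x + 1`), and the learning rate and step count are arbitrary choices for the illustration.

```python
# Made-up training data lying exactly on y = 2x + 1.
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [2.0 * x + 1.0 for x in xs]

def mse(w: float, b: float) -> float:
    """Mean squared error of the line w*x + b on the training points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

w, b = 0.0, 0.0      # start with a flat line
lr = 0.1             # learning rate (assumed value)
losses = []

for step in range(200):
    losses.append(mse(w, b))
    # Gradients of the mean squared error with respect to w and b.
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / len(xs)
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
    # One small nudge of the curve toward the data.
    w -= lr * dw
    b -= lr * db

print(f"first loss={losses[0]:.4f}  last loss={losses[-1]:.2e}")
print(f"learned w={w:.4f}, b={b:.4f}")
```

Each step only moves the line a little, so there are many intermediate curves between the starting guess and the final fit, which is the gradual, smooth fitting the quote describes.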

Learning as a Landscape (Energy Landscapes)

Clearly a manifold is just something like a multi-dimensional matrix where each spot has a unique identifier and is modifiable. Oftentimes we can think of the world topologically too, as something heavily folded.

And as such, an energy landscape functions as a manifold. It would potentially be easier to understand, or to think about conceptually, as a 3D landscape similar to the #Fitness Landscapes, with their peaks and troughs.

One could even draw the connection to Reaction Coordinate Diagrams:

Now how would we consider this aspect? Let's go through the learning, the reasoning process as a #streamofconsciousness

How could we apply the energy landscape, or any kind of landscape, or manifold, to something like learning?

Here is the #streamofconsciousness for the following idea. It's not fully worked out but isn't that what I have notes for? To document my learning process and figure out how it works? The worst would be if I just Jumped to Conclusions and skipped over the whole thought process. Then it wouldn't work at all and you wouldn't see how I came to this Schema or this thought process.

Anyways, how does the landscape translate to learning? I know that there's some sort of future transformation that we have to consider, and yet it's not differential. Or at least it might be a function of a differential. I still have to figure out what it could be if it uses that as a parameter.

So maybe the differential does some sort of transformation, linear or otherwise, on the fitness landscape. I'm thinking of this as if the differential can be considered the catalyst for making the learning so much faster. So the general idea that I haven't at all written out is as follows:

A landscape as a 3d manifold, for the moment let's consider that the manifold is

WOAH okay hold on, I just realized I'm essentially building a Support Vector Machine, or I'm trying to in my mind. Maybe I am not, who knows if I am even using that properly.

Back to it! So, the landscape is a 3D manifold, but we will consider it as a 2D structure for simplicity. Honestly it's probably even higher in dimension, but it's easiest to represent it as 3D, and thus 2D for the elements as established.

Let's say that here we have two peaks and one clear trough. It would be really shit to get stuck in that trough. However! Somehow we need to get past this first local maximum to reach the optimum. How does AI do this? Maybe it functions on the principle of Momentum? Or maybe it uses the differential in some other way. For us to figure this out, watch me go over to Momentum and explore that a little more.
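A small experiment for the Momentum guess, under loud assumptions: the landscape `f` below is invented for this note (a shallow trough near x = 0.96, a deeper trough near x = -1.04, and a bump between them), we are minimizing, and "momentum" means the classic heavy-ball update. It is one way optimizers can get past a bump, not a claim about how any particular AI system does it.

```python
def f(x: float) -> float:
    """Hypothetical 1D landscape: shallow trough on the right,
    deeper trough on the left, small bump in between."""
    return x**4 - 2 * x**2 + 0.3 * x

def grad(x: float) -> float:
    """Derivative of f (the 'differential' driving each step)."""
    return 4 * x**3 - 4 * x + 0.3

def descend(x: float, lr: float = 0.01, momentum: float = 0.0, steps: int = 500) -> float:
    """Gradient descent with optional heavy-ball momentum.
    With momentum=0.0 this is plain gradient descent."""
    velocity = 0.0
    for _ in range(steps):
        # Keep a fraction of the previous step, then add the new downhill push.
        velocity = momentum * velocity - lr * grad(x)
        x = x + velocity
    return x

x_plain = descend(2.0)                    # rolls into the nearest trough and stops
x_momentum = descend(2.0, momentum=0.9)   # carries enough speed to clear the bump

print(f"plain:    x={x_plain:.3f}  f(x)={f(x_plain):.3f}")
print(f"momentum: x={x_momentum:.3f}  f(x)={f(x_momentum):.3f}")
```

Both runs start from the same spot on the same slope. Plain descent settles in the shallow trough on the right, while the momentum run keeps some of its accumulated speed, rolls over the bump, and ends up in the deeper trough on the left. With other starting points or settings the momentum run could just as well overshoot somewhere unhelpful, so treat this as an illustration of the idea only.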

[!see-also] Momentum I wrote a lot about learning as a landscape and modeled as such. So it might be nice to take a look at that

N+1 Dimensionality (Meta-Framework)

Dimensionality, when you are doing any form of learning you are going to have to lift yourself out of whatever dimension you are in:

[!see-also] Claude

Your thinking shows the hallmark "n+1 dimensionality" - consistently operating one level of abstraction above the topic at hand.

Classically you just need to step back slightly so that you can get to that greater Decision Boundary

N+1 dimensionality is one of the foundational features of being able to Metathink. You want to do this because you need to reconsider what you are doing so that you can create a new Formal System that will be able to explain (explain-ish) your present situation, your present Manifold.

There are some thoughts that exist before that, where you can talk about religion. We all accept some level or element of this in Religion, where Transcendence directly links to having to explain something that isn't explainable in the dimension you are in. So you have to create one that exists and can explain it. There are certain ways of doing this where you can just jump to some invisible function that just explains it, though obviously if you just accept it, where it might work for some things, you are still accepting a Dogma, and this Dogma can easily be corrupted. SO:

[!bug] Buyer Beware! You don't want to fall for a cult

However, by taking small steps back you are creating formal systems slowly and modifying them directly. WE NEED TO ALLOW OUR COMPUTERS TO SLOWLY ADD MORE DIMENSIONALITY. THAT'S WHY SCALING WORKS. It works because they are slowly adding more dimensionality and they have the space to do it. We could probably achieve better results if we allow it to say, "eh, this decision boundary is good enough," move back one more dimension, and then increase it that way. It should be able to choose to move back.
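A loose illustration of why one extra dimension can buy a better decision boundary, in the spirit of the feature-lifting trick used with Support Vector Machines. Everything here is made up for the note: the seven 1D points, their labels, and the helper `best_threshold_accuracy`. It does not model a network choosing to "move back" on its own; it only shows that a boundary impossible in n dimensions can become trivial in n+1.

```python
# Made-up 1D data: class 1 sits in the middle, class 0 on both sides.
points = [-2.0, -1.5, -0.5, 0.0, 0.5, 1.5, 2.0]
labels = [0, 0, 1, 1, 1, 0, 0]

def best_threshold_accuracy(values: list[float], labels: list[int]) -> float:
    """Best accuracy achievable by a single cut on one feature,
    trying every gap between sorted values and both orientations."""
    ordered = sorted(values)
    cuts = [ordered[0] - 1.0]
    cuts += [(a + b) / 2.0 for a, b in zip(ordered, ordered[1:])]
    cuts += [ordered[-1] + 1.0]
    best = 0.0
    for cut in cuts:
        for sign in (1, -1):
            # sign=1 predicts class 1 above the cut, sign=-1 below it.
            preds = [1 if sign * (v - cut) > 0 else 0 for v in values]
            accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
            best = max(best, accuracy)
    return best

# In the original dimension, no single cut separates the classes.
acc_1d = best_threshold_accuracy(points, labels)

# Step back one dimension: each x becomes (x, x**2). A flat cut on the
# new x**2 axis is a straight-line boundary in the lifted space.
lifted_axis = [x * x for x in points]
acc_lifted = best_threshold_accuracy(lifted_axis, labels)

print(f"best single cut in 1D:       {acc_1d:.3f}")
print(f"best cut on the lifted axis: {acc_lifted:.3f}")
```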

Hmm, how can I explain this in a way that makes sense to me? This is more than Dimensional Expansion; rather, it's something closer to what was discussed in my Claude 3.7 initial thoughts. Let's see, #to-do

Musings and Broader Applications

What's interesting is that machine learning draws on and attempts to directly simulate ~our own way of interpreting the world~ AND IT'S SO ABSOLUTELY easy to democratize, because, OF FUCKING COURSE, with enough compute, and with the tools that we now have, with even just the base models and so many guides, we will absolutely figure out how we think. Because it's just that. I know that I wonder about how I think, so I assume we'll all find someone who is equally curious.

The Garden of the Prophet by Kahlil Gibran

Recently I read an excerpt of The Garden of the Prophet