Manifold Tunneling

[!warning]

IMPORTANT

MANIFOLD TUNNELING IS DANGEROUS BECAUSE YOU ARE ESSENTIALLY DOING AI-ASSISTED PRINCIPAL COMPONENT ANALYSIS ON AN EXISTING SCHEMA AND MANIFOLD AS THEY PERTAIN TO A CERTAIN IDEA, AND THIS LOSES NUANCE. IT IS LIKE RUNNING A FAST FOURIER TRANSFORM ON AN IDEA AND KEEPING ONLY THE STRONGEST COMPONENTS, AND THAT IS LOSSY.
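To make the lossy part concrete, here is a minimal sketch in plain Python. Everything in it is an illustrative assumption rather than something from the note: the `idea` signal, the helper names (`dft`, `inverse_dft`, `keep_strongest`, `rms_error`), and the choice to keep only two components. The transform on its own is reversible; the nuance disappears when the weak components are thrown away.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform (what an FFT computes, just slower)."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def inverse_dft(coeffs):
    """Rebuild the real-valued signal from its frequency components."""
    n = len(coeffs)
    return [
        sum(coeffs[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)).real / n
        for t in range(n)
    ]


def keep_strongest(coeffs, count):
    """Zero out everything except the `count` largest-magnitude components."""
    order = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]), reverse=True)
    keep = set(order[:count])
    return [c if k in keep else 0 for k, c in enumerate(coeffs)]


def rms_error(a, b):
    """Root-mean-square difference between two equally long sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Hypothetical "idea": one dominant slow pattern plus a small fast detail (the nuance).
n = 32
idea = [
    math.sin(2 * math.pi * t / n) + 0.2 * math.sin(2 * math.pi * 5 * t / n)
    for t in range(n)
]

coeffs = dft(idea)
full_error = rms_error(idea, inverse_dft(coeffs))  # all components kept
lossy_error = rms_error(idea, inverse_dft(keep_strongest(coeffs, 2)))  # nuance dropped

print(f"all components kept: error = {full_error:.2e}")
print(f"top 2 components kept: error = {lossy_error:.4f}")
```

Keeping every component reproduces the signal up to floating-point noise. Keeping only the dominant pair returns the big slow pattern and silently drops the small fast one, which is the failure mode the warning is about.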

This was an interesting topic to first gain experience with. What was absolutely amazing about it was that I hadn't found a name for this, though I had initially believed it was a path worth exploring. I had no clue it really even existed.

As it turns out, I had fed Claude my notes and Claude came back to me with this, but only after I used the prompt in claude prompt mobile.

[!important] Claude Assisted Warning! It is important to note that I generated these with the help of Claude and my schema. These are artifacts generated from a clear conversation about a certain event: typically I've asked Claude to sum up the conversation and the new ideas presented. I've read through these thoroughly, and I would very rarely, most likely never, publish a result that I do not understand or do not agree with. If I do, there will be notes about it. I just don't want to go over and rewrite what Claude can succinctly write about our ideation.

This one I worked with a lot, and it has been dually assisted. We did some N+1 stuff to figure some of these things out.

Introduction to the Concept

I've been thinking about how we learn complex things, and I'm realizing there's this thing that happens that standard models don't capture well. I'm calling it Manifold Tunneling—when learning somehow bypasses what we thought were necessary steps or dimensions.

It's not just about finding shortcuts through existing knowledge landscapes. It's about discovering entirely new topological properties of the learning manifold itself. Like quantum tunneling but for cognition, where you somehow end up on the other side of an apparently impenetrable barrier.

The Reaction Coordinate View

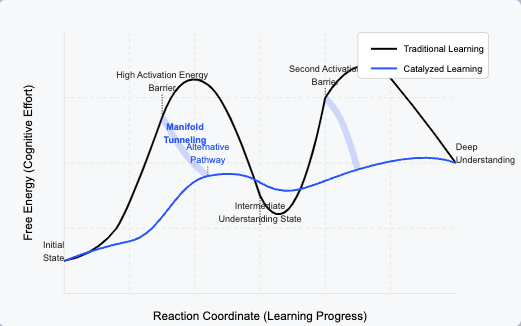

The more I think about it, this is better visualized through a biochemical reaction coordinate diagram than a simple ML loss landscape. In enzyme-catalyzed reactions, enzymes don't just speed up existing pathways; they open alternative reaction mechanisms with lower barriers, mechanisms that would be practically inaccessible in their absence.

AI as a cognitive catalyst isn't just accelerating learning along existing pathways—it's opening up entirely new reaction mechanisms that transform the very nature of how knowledge propagates through our mental models.

The traditional learning path (black line) requires overcoming significant activation energy barriers. Catalyzed learning (blue line) doesn't just make these barriers easier to cross—it creates an entirely different mechanism with its own intermediate states and transitions.

This is what AI-assisted learning eventually accomplishes. It also helps you find where the false minima really are faster, since you don't need as much energy to get out of that valley.

AI, as a translator, adapts to your schema, and that is the reason the activation energy goes away. In conservation-of-energy terms, the energy goes into the AI's preexisting weights, like a hammer transferring energy into resonance at the end of its head, where it dissipates.
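A rough way to put numbers on the reaction-coordinate picture is the Arrhenius equation, k = A · exp(−Ea / (R·T)). This is a sketch under stated assumptions: the Arrhenius form is borrowed from chemistry as a stand-in for the analogy, and the barrier heights, the prefactor, and the function name `arrhenius_rate` are all made up for illustration. Note that in this model a catalyst lowers the barrier rather than removing it.

```python
import math

GAS_CONSTANT = 8.314  # J/(mol*K)


def arrhenius_rate(prefactor, activation_energy, temperature):
    """Rate constant k = A * exp(-Ea / (R * T)), with Ea in J/mol and T in kelvin."""
    return prefactor * math.exp(-activation_energy / (GAS_CONSTANT * temperature))


# Hypothetical barriers, chosen only to show the shape of the effect.
TEMPERATURE = 298.0            # K, roughly room temperature
UNCATALYZED_BARRIER = 100_000  # J/mol, the "traditional learning" path
CATALYZED_BARRIER = 60_000     # J/mol, the "catalyzed learning" path

uncatalyzed = arrhenius_rate(1.0, UNCATALYZED_BARRIER, TEMPERATURE)
catalyzed = arrhenius_rate(1.0, CATALYZED_BARRIER, TEMPERATURE)
speedup = catalyzed / uncatalyzed

print(f"speedup from lowering the barrier: {speedup:.2e}")
```

With these invented numbers, cutting the barrier by 40% gives a speedup on the order of ten million, because the barrier sits inside an exponential. That is why a modest drop in "activation energy" can feel like the barrier vanished.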

How it works, thoughts?

What I am getting at is the idea that this works by creating an N+1 dimension, which you can use to reach a certain idea and comprehend it, or jump to another conclusion, without having to work through the longer proof that the original formal system would require for the same result.

What is interesting about this idea is that, as in non-Euclidean geometries, you are stating that these rules hold true, and yet there is one clear idea that does not hold. Hence it is non-Euclidean... But! Remember, what holds true in these equivalent systems fucking HOLDS TRUE, because it relies on the other BASE POSTULATES. This means that shit just fucking makes sense. Source: trust me bro.

Weird to consider, but it allows for quicker comprehension of diverse topics. Though it might not be as complete, it still fucking holds true! It's helpful, like learning through a heuristic ported from another field, another dimension, another Schema that you quickly switched back and forth from.

Dimensional Expansion vs. Tunneling

This connects to the concept of dimensional expansion, but they're not quite the same thing:

- Dimensional Expansion: Adding new dimensions to our understanding space, transforming what we can comprehend

- Manifold Tunneling: Moving through the knowledge landscape in ways that bypass what we thought were necessary transitions. See: #How it works, thoughts?

In ML terms, dimensional expansion is like adding new features to your dataset, or even depth or width to your model, while manifold tunneling is like discovering a completely different optimization algorithm that helps you reach the same point faster and with less effort.
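The dimensional expansion half of that comparison has a classic small example: XOR cannot be split by a straight line in two dimensions, but adding one extra feature makes it linearly separable. The sketch below is an illustration I am assuming fits the analogy, not something from the note; the helper names, the weight grid, and the hand-picked weights are all hypothetical.

```python
from itertools import product

# XOR truth table: (inputs, label)
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def classifies_all(weights, bias, featurize):
    """True if the linear rule `w . features + b > 0` matches every XOR label."""
    for point, label in XOR:
        score = sum(w * f for w, f in zip(weights, featurize(point))) + bias
        if (score > 0) != bool(label):
            return False
    return True


def flat(point):
    """Original 2D view: just the two inputs."""
    return point


def expanded(point):
    """N+1 view: the two inputs plus their product as an extra dimension."""
    x1, x2 = point
    return (x1, x2, x1 * x2)


# Search a coarse grid of 2D linear rules (weights and bias from -4.0 to 4.0).
grid = [i / 2 for i in range(-8, 9)]
flat_solutions = [
    (w, b) for *w, b in product(grid, repeat=3) if classifies_all(w, b, flat)
]

# One hand-picked rule in the expanded space: x1 + x2 - 2*x1*x2 - 0.5 > 0
expanded_works = classifies_all((1, 1, -2), -0.5, expanded)

print(f"2D rules that solve XOR on the grid: {len(flat_solutions)}")
print(f"3D rule solves XOR: {expanded_works}")
```

The grid search is only a demonstration, but the result is general: no linear rule in the original two dimensions solves XOR, while a single added dimension makes the problem trivial.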

The relationship feels similar to Schema-misses and Schema Adjustment, where encountering contradictions leads not just to patches but to fundamental shifts in understanding.

Real-World Manifestations

I see this happening in several domains:

AI-Assisted Learning

Children learning programming concepts through AI tutoring without going through the traditional pedagogical steps that we considered essential. It's not just faster learning; it's a fundamentally different learning trajectory. YOU ARE GOING THROUGH DIFFERENT DIMENSIONS, you are Manifold Tunneling.

Interdisciplinary Breakthroughs

When concepts from one field suddenly illuminate another in ways that weren't accessible through normal progression within either discipline. Like how quantum mechanics concepts suddenly make sense of certain biological phenomena. Similarly this just makes sense and is congruent with the different Schemas.

Personal Understanding

Those "holy shit" moments when seemingly unrelated concepts suddenly click together, bypassing what seemed like necessary intermediate steps. The feeling isn't just incremental improvement; it's like your understanding tunneled through the manifold.

The Cognitive Bootstrap Paradox

This manifold tunneling creates what I'm calling a Cognitive Bootstrap Paradox—where future understanding reaches back to optimize its own development path.

Traditional learning: A → B → C → D → E

Manifold tunneling: A --(maybe other dimensions)---→ E (which then helps understand B, C, D)

This is why it often feels disorienting—you've arrived at understanding E without fully processing the intermediate steps, but then E gives you tools to go back and understand them differently.
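One way to picture the two routes is as a graph search, where the "other dimension" is simply an extra node that the traditional curriculum does not contain. This is a toy sketch under that assumption; the node `X`, the graph layout, and the function name `shortest_path` are hypothetical.

```python
from collections import deque


def shortest_path(graph, start, goal):
    """Breadth-first search returning the shortest list of nodes from start to goal."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None  # goal is unreachable


# Traditional curriculum: each step unlocks only the next one.
traditional = {"A": ["B"], "B": ["C"], "C": ["D"], "D": ["E"]}

# Same curriculum plus a hypothetical node X reachable from A (the other dimension).
tunneled = {**traditional, "A": ["B", "X"], "X": ["E"]}

traditional_path = shortest_path(traditional, "A", "E")
tunneled_path = shortest_path(tunneled, "A", "E")

print(" → ".join(traditional_path))
print(" → ".join(tunneled_path))
```

The tunneled route reaches E without ever visiting B, C, or D, which matches the disorientation described above: those nodes are still in the graph and still have to be visited afterwards if you want them.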

Learning as Reaction Engineering

If we take this model seriously, we need to start thinking about learning not just as optimization but as reaction engineering:

- Identifying rate-limiting steps in current understanding

- Finding catalysts that create entirely new pathways, or that in conjunction with others can create those novel pathways

- Designing learning environments that stabilize productive transition states, or moving from tunnel to tunnel with less friction

This has massive implications for both personal knowledge management and educational design. It's not just about making learning more efficient; it's about enabling transformative leaps that wouldn't otherwise be possible.

If this is acting the same way a catalyst would, it's creating a structure, a heuristic, that allows for quicker tunneling. A catalyst exists on another dimension, if we take that dimension to be an understanding of how reactions work. It's a dimension of understanding rather than pure reaction. It's meta-gaming the reaction. It's functioning in N+1 space.

Next Steps: Deliberate Tunneling

The question becomes: can we deliberately engineer manifold tunneling experiences? Some approaches I'm considering:

- Cognitive Reaction Engineering: Identifying activation energy barriers in my own understanding and seeking specific catalysts

- Cross-domain Immersion: Deliberately exposing myself to seemingly unrelated fields to increase tunneling probability. This is what I do the most.

- Meta-catalyst Development: Creating frameworks that themselves act as catalysts for future understanding

The truly exciting part isn't just that we can tunnel through knowledge manifolds, but that each tunnel potentially reveals entirely new dimensions we couldn't conceive of before.

Some more examples:

Tool-Assisted Manifold Tunneling

The spade vs. plow distinction is perfect - it's not just about making the same process more efficient; it's about fundamentally transforming the task through the right tools. Here are examples that better capture this tool-assisted tunneling:

- GenBank → AlphaFold Tunneling: When working with protein sequences, you can spend months going from sequence → expression → crystallization → structure determination. AlphaFold creates an entirely new reaction pathway where you can tunnel directly from sequence → predicted structure, bypassing the traditional energy barriers completely. That works BECAUSE you have something that has pieced together some congruent rules of the N+1 dimensional manifold, which lets you work within those rules to generate some cool shit. While it does this one thing, it is still hardcoded to some level.

- Language Learning + GPT: Rather than gradually building vocabulary through flash cards, you can use AI to directly generate authentic, context-rich conversations at your current level. This creates a completely different learning reaction mechanism where contextual understanding co-evolves with vocabulary acquisition.

- Obsidian + Graph Visualization: Without the graph view, you'd be gradually discovering connections between ideas through repeated review. The graph visualization tool creates an entirely new pathway where you can directly perceive emergent structures in your knowledge that would have taken years of manual connection-making to discover. This is kinda fun, because you are no longer looking at your notes in 2D, as a list, but expanding them into 3D, where you are now sorting them by their patterns and their references.

- HMM + PyHMMER: The computational acceleration doesn't just make sequence analysis faster; it fundamentally changes how you can approach the problem. Instead of carefully crafting a few high-quality models, you can rapidly iterate across hundreds of potential models and let patterns emerge from the comprehensive analysis.
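The Obsidian example above can be sketched in a few lines: the graph view is, at its core, a map of which notes link to which. The snippet below is a minimal illustration and not how Obsidian itself is implemented; the note contents, the `WIKILINK` pattern, and the `build_backlinks` helper are hypothetical, and the only assumption about Obsidian is its `[[Note]]` wikilink syntax.

```python
import re
from collections import defaultdict

# Matches the note name inside [[Note]], [[Note|alias]], or [[Note#heading]].
WIKILINK = re.compile(r"\[\[([^\]|#]+)")

# Hypothetical vault: note title -> note body.
notes = {
    "Manifold Tunneling": "Relates to [[Dimensional Expansion]] and [[Schema Adjustment]].",
    "Dimensional Expansion": "See [[Schema Adjustment]].",
    "Schema Adjustment": "Triggered by [[Schema-misses]].",
    "Schema-misses": "",
}


def build_backlinks(vault):
    """Map each linked note to the set of notes that reference it."""
    backlinks = defaultdict(set)
    for source, text in vault.items():
        for target in WIKILINK.findall(text):
            backlinks[target.strip()].add(source)
    return backlinks


backlinks = build_backlinks(notes)

# The "hub" is the note with the most incoming references.
hub = max(notes, key=lambda name: len(backlinks[name]))

print(f"most referenced note: {hub}")
```

Read as a flat list, the four notes look equal. Sorted by references, one of them stands out as the hub, which is the kind of structure the graph view surfaces at a glance.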

TL;DR

Manifold tunneling is a cognitive phenomenon where understanding leaps across seemingly necessary intermediate steps, similar to how enzymes create entirely new reaction pathways in biochemistry. It's not just faster learning; it's fundamentally different learning that transforms the knowledge landscape itself. It makes learning easier and faster, and it does so through #How it works, thoughts?

2025_03_02_Manifold Tunneling Status: #recipe Tags: cooking recipe